September 4, 2024

Use an LLM and AI to filter a Retool table with natural language

This tutorial was originally written for and published on the Retool Blog on May 23, 2024. We're cross-posting it here now that we have a blog 😊

It was also the inception of another project, AskYourData, which is a work-in-progress.

When I used the “code interpreter” in ChatGPT for the first time to interact with a CSV, I realized that something significant was happening with this new capability.

The chat flow, featuring questions and statistically backed answers with charts, is a powerful paradigm in data exploration.

It reminds me of the flow found in a data notebook, where snippets of code generate “answers” in the form of tables, charts and logs. The difference lies in the form of the questions; they are expressed in natural language rather than written code.

Data exploration is crucial for businesses. It’s why dashboards and internal tools are so prevalent and critical for companies everywhere.

However, in this context, a chat flow may not be the most effective approach. Imagine a customer support representative trying to find specific customer information by querying a database with multiple questions, similar to a ChatGPT interaction. They need to be able to ask once and receive the correct answer immediately.

Typically, such questions are made by adjusting filter parameters. But what if these parameters could be set directly from a support rep’s question written in natural language?

We built this prototype with the following assumption: SQL is the programming language closest to natural language. And GPT-4 is very good at converting natural language into SQL.

What if we could convert a user’s question into the correct SQL used to filter a given dataset? Furthermore, what if we query non-SQL sources by using the “Query JSON with SQL” resource, which employs AlaSQL to filter datasets using the generated SQL?

You’ll need a free Retool account and an OpenAI API key (with active credits) in order to ask GPT-4 to convert the user text into an SQL string.

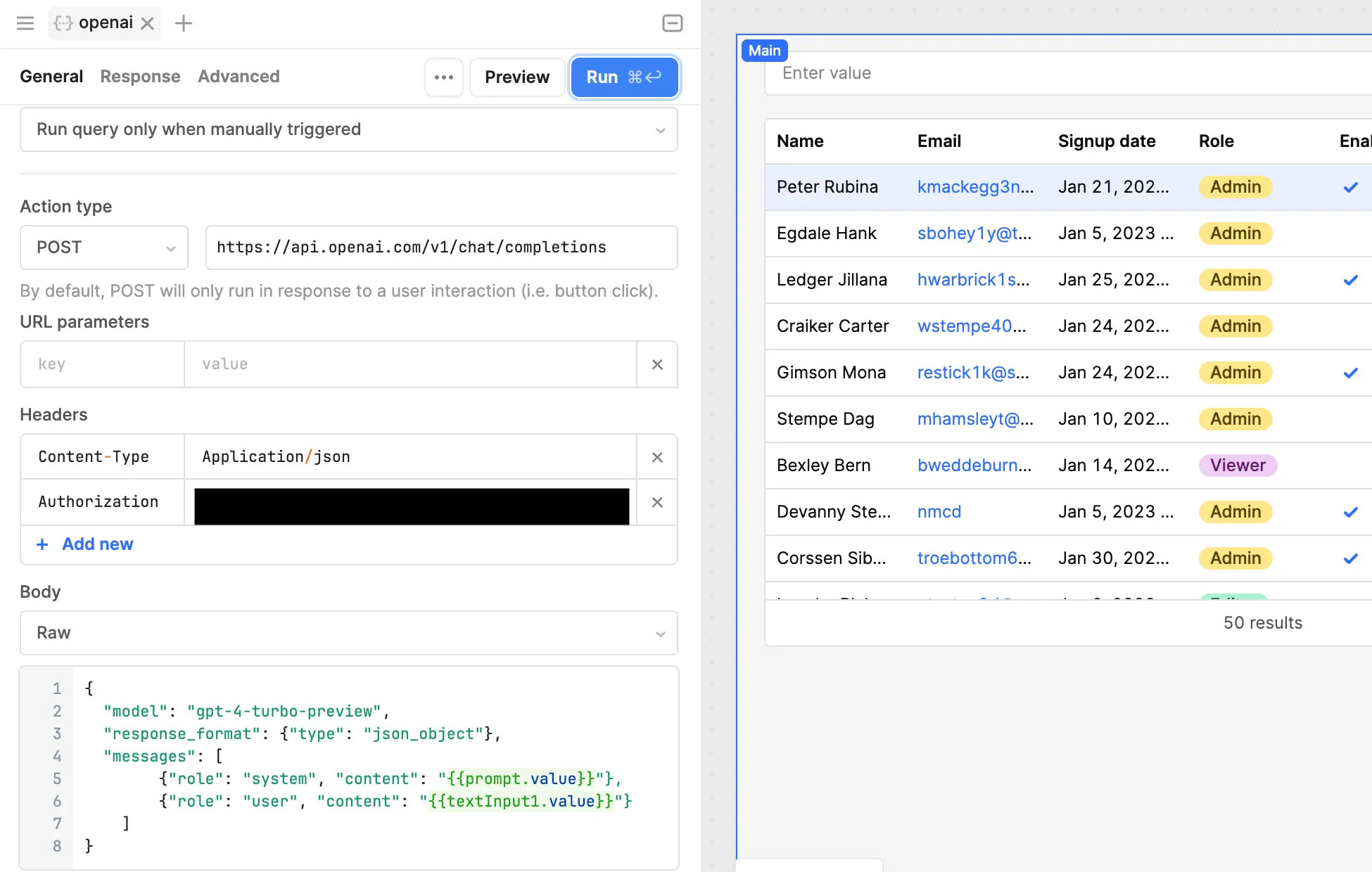

We use the OpenAI API because we need to set the “response_format” as “json_object.” This is something we can’t control out of the box with Retool AI actions. I hope this is something they will add in the future.

Then, a bit of “glue”—some JavaScript transformers are used to properly package the prompt to send to the GPT-4 endpoint as well as parsing its response for our purpose.

Here’s a step by step on the implementation details to add and configure a natural language filter for a Retool Table component.

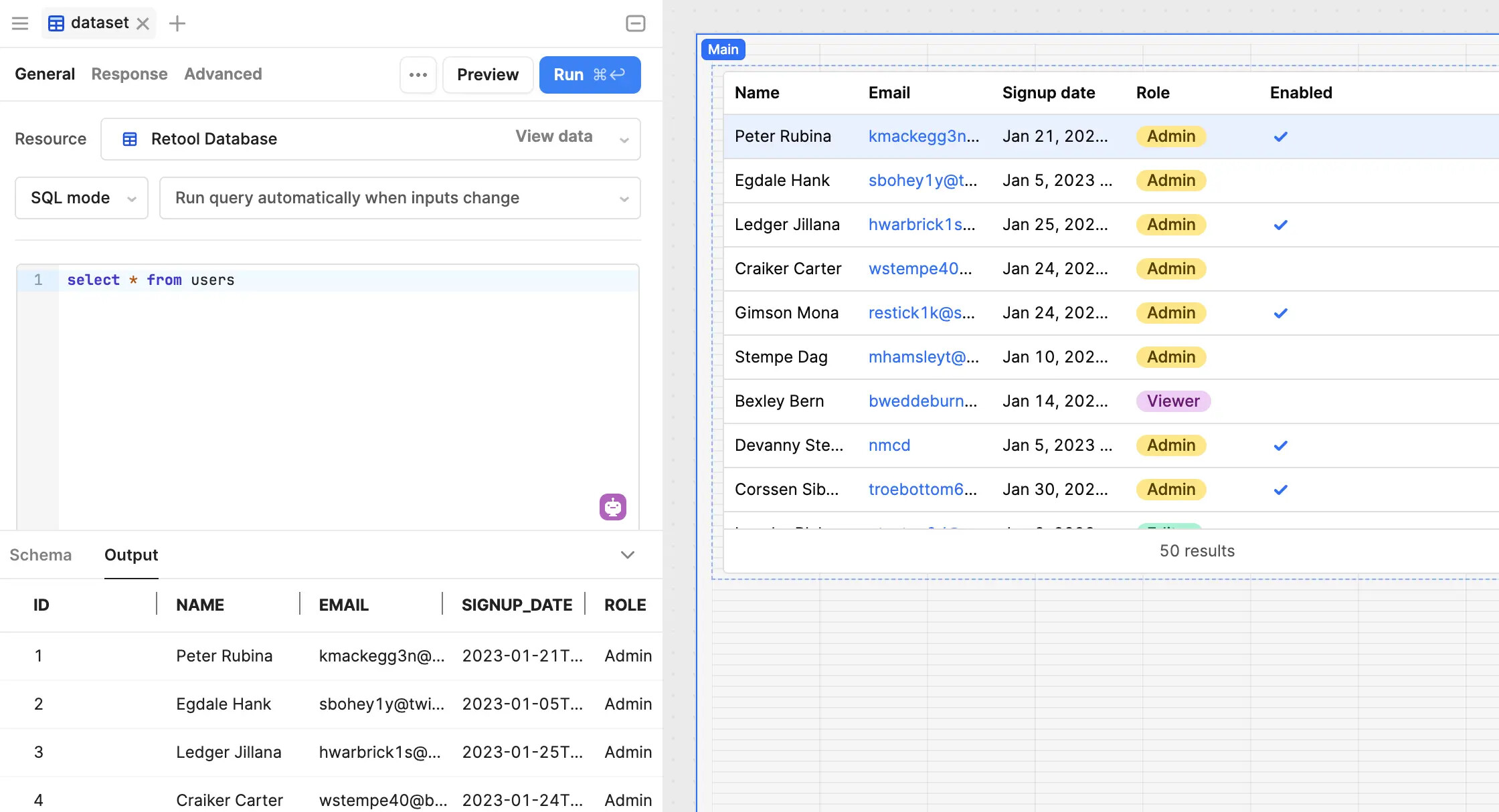

First, drag the Retool Table component onto the canvas—then let’s get some data. We're using the “sample_users” table included in Retool Database to create a simple example that’s easy to grasp, allowing you to extend it further for your specific use case.

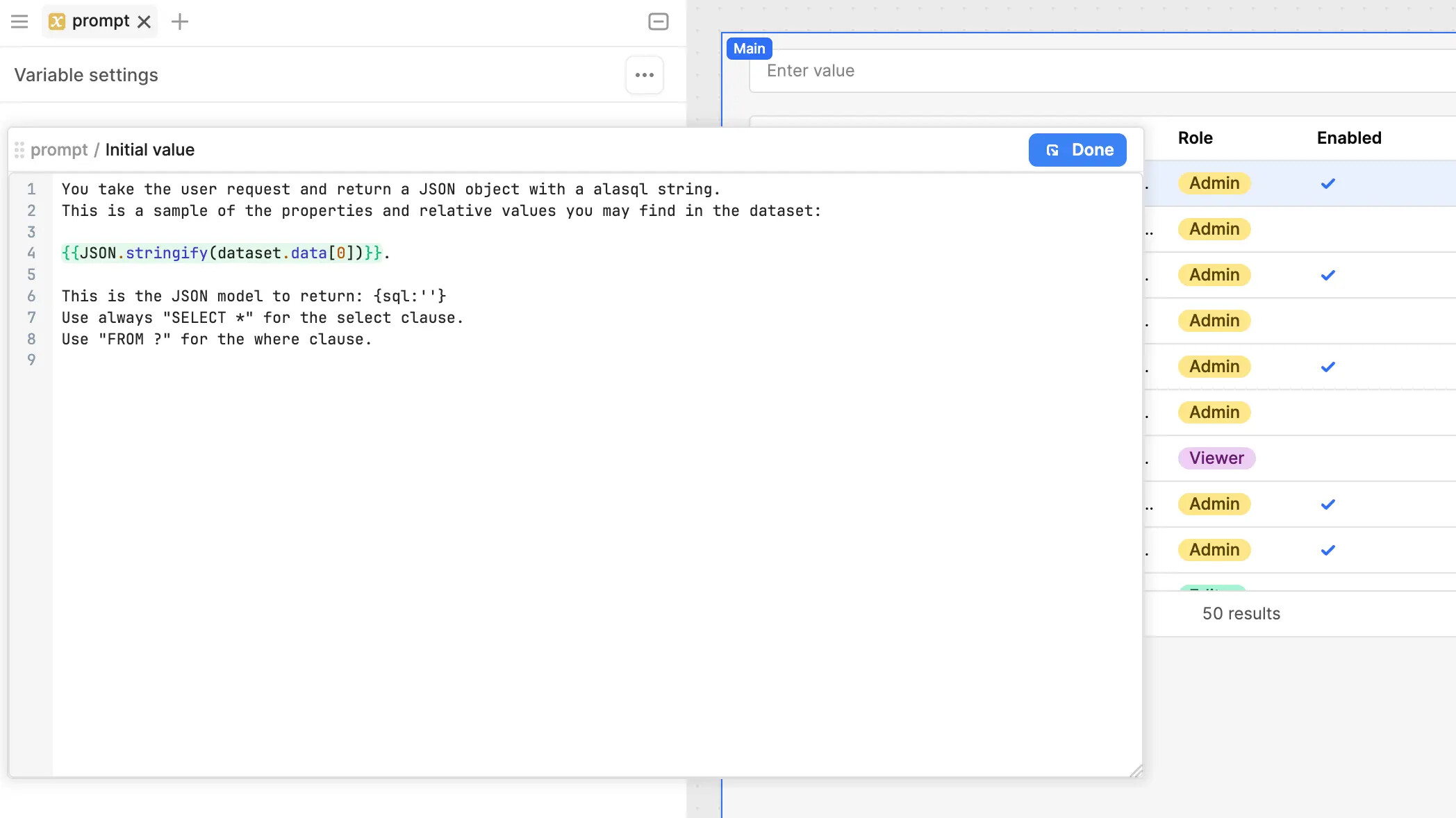

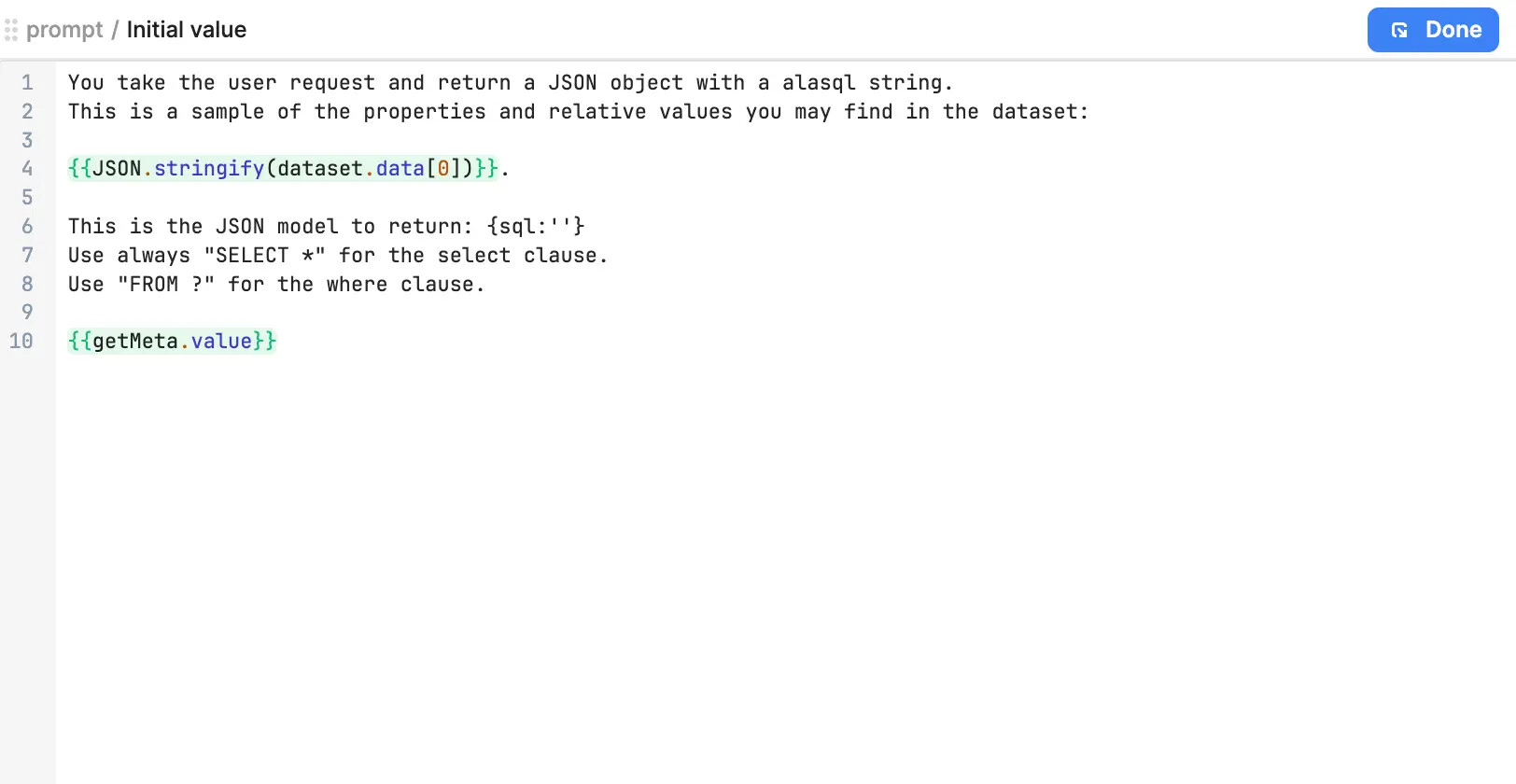

We need to prepare a Retool variable for the prompt part we’ll use in the OpenAI query. This is the “system” role you typically include as the first item in the array of messages for a “chat/completion” call.

Aside from the usual general prompt settings (how the model should act), we already start passing a bit of context (more about it later), a sample data point, and some rules to instruct how we want the payload in the response.

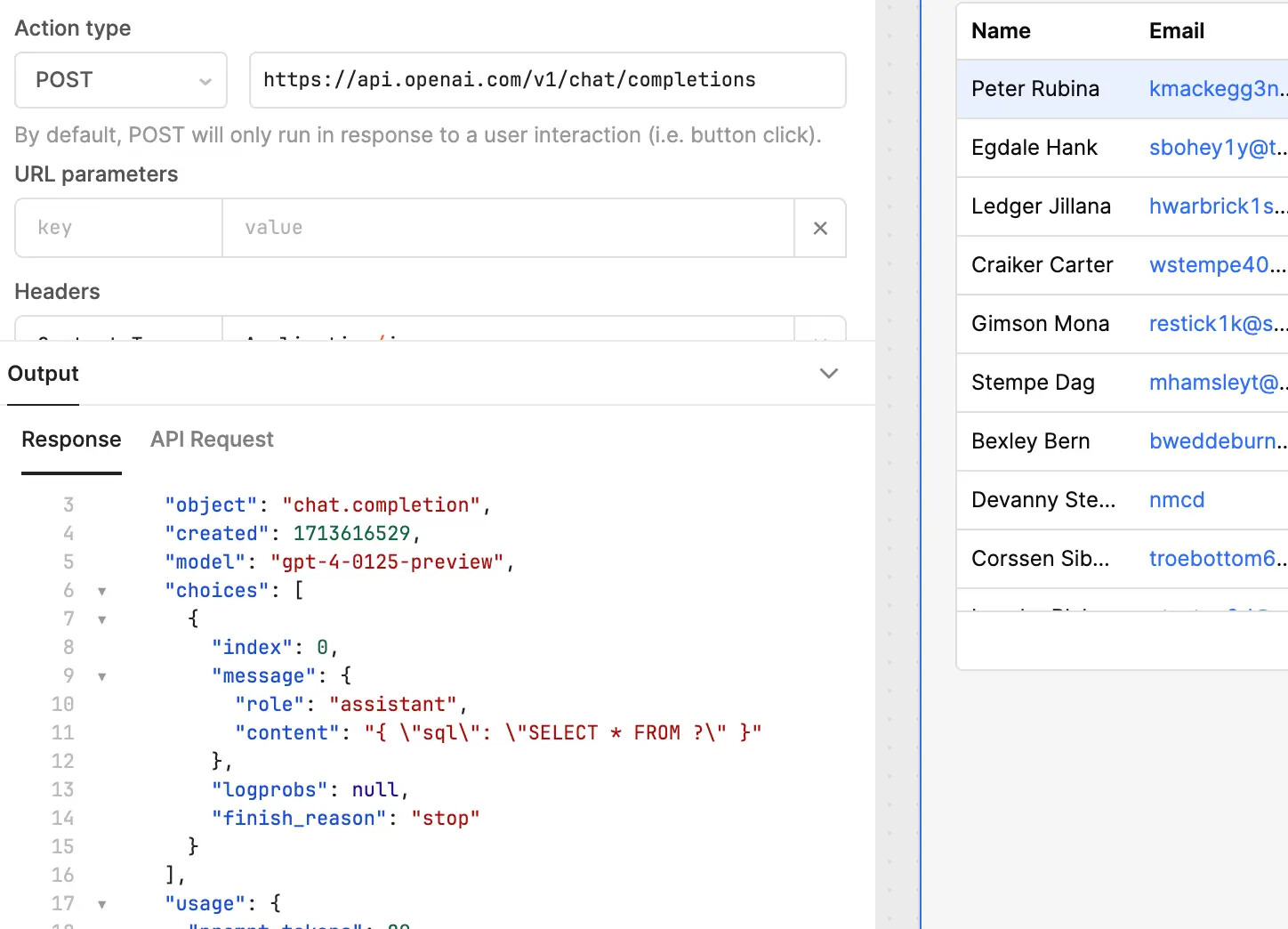

Now it’s time to configure the OpenAI query. We’re using the REST API Resource that gives full flexibility. These are the bits you need to configure properly:

If you run the query, you should already see a meaningful response:

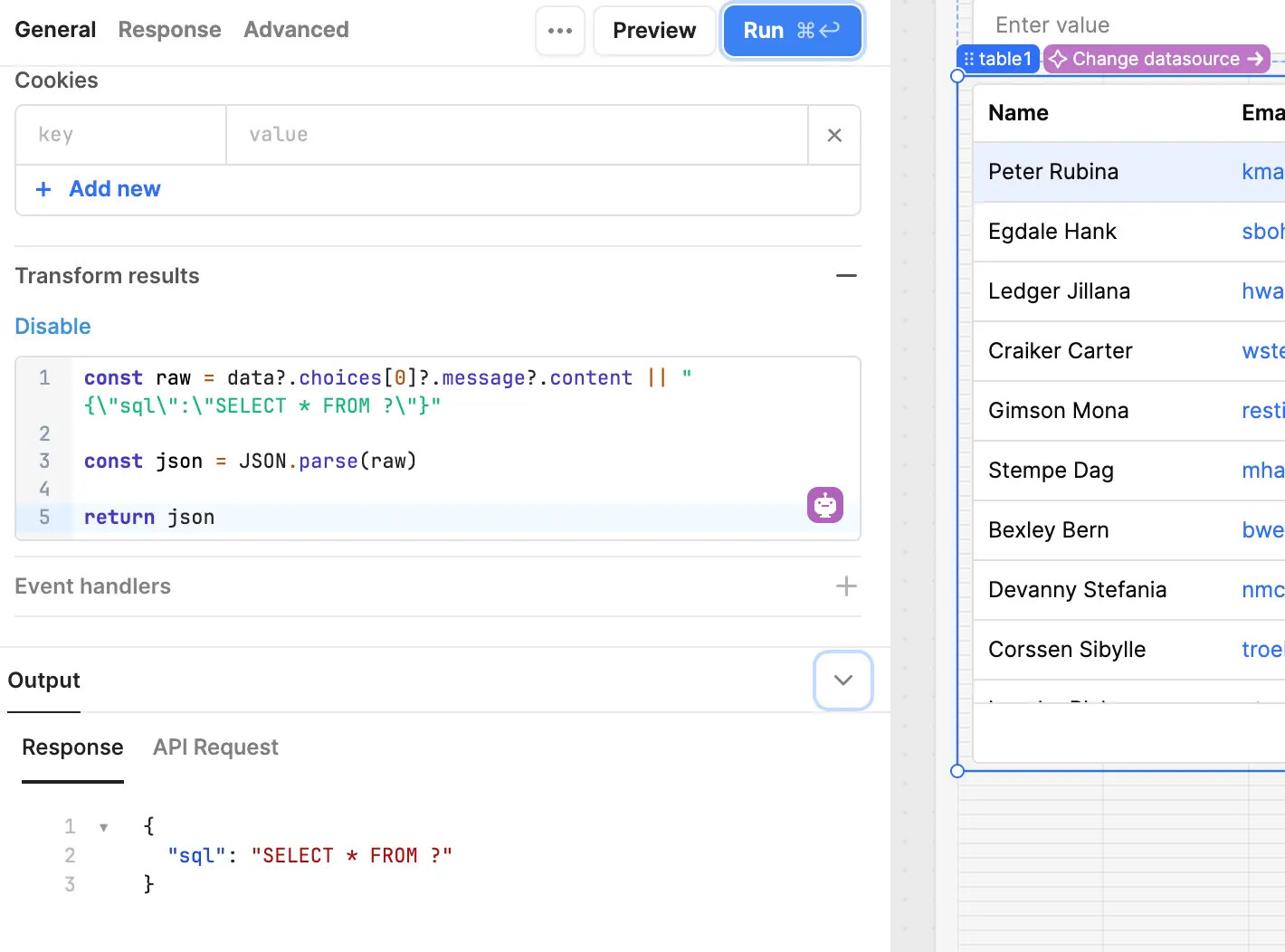

In order to ease our next integration step, let’s enable the transformation results panel in the OpenAI query, because all we need is the SQL string:

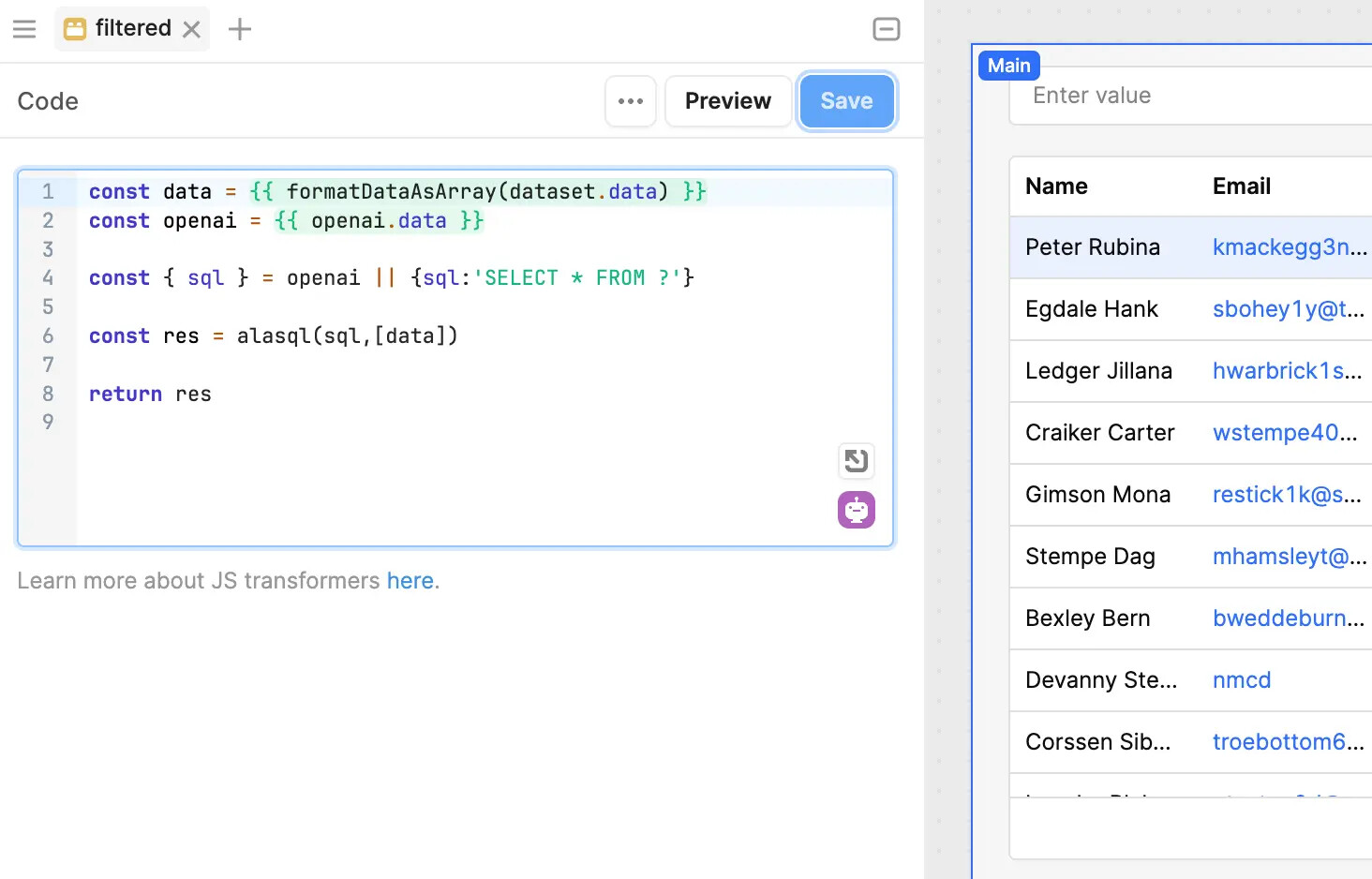

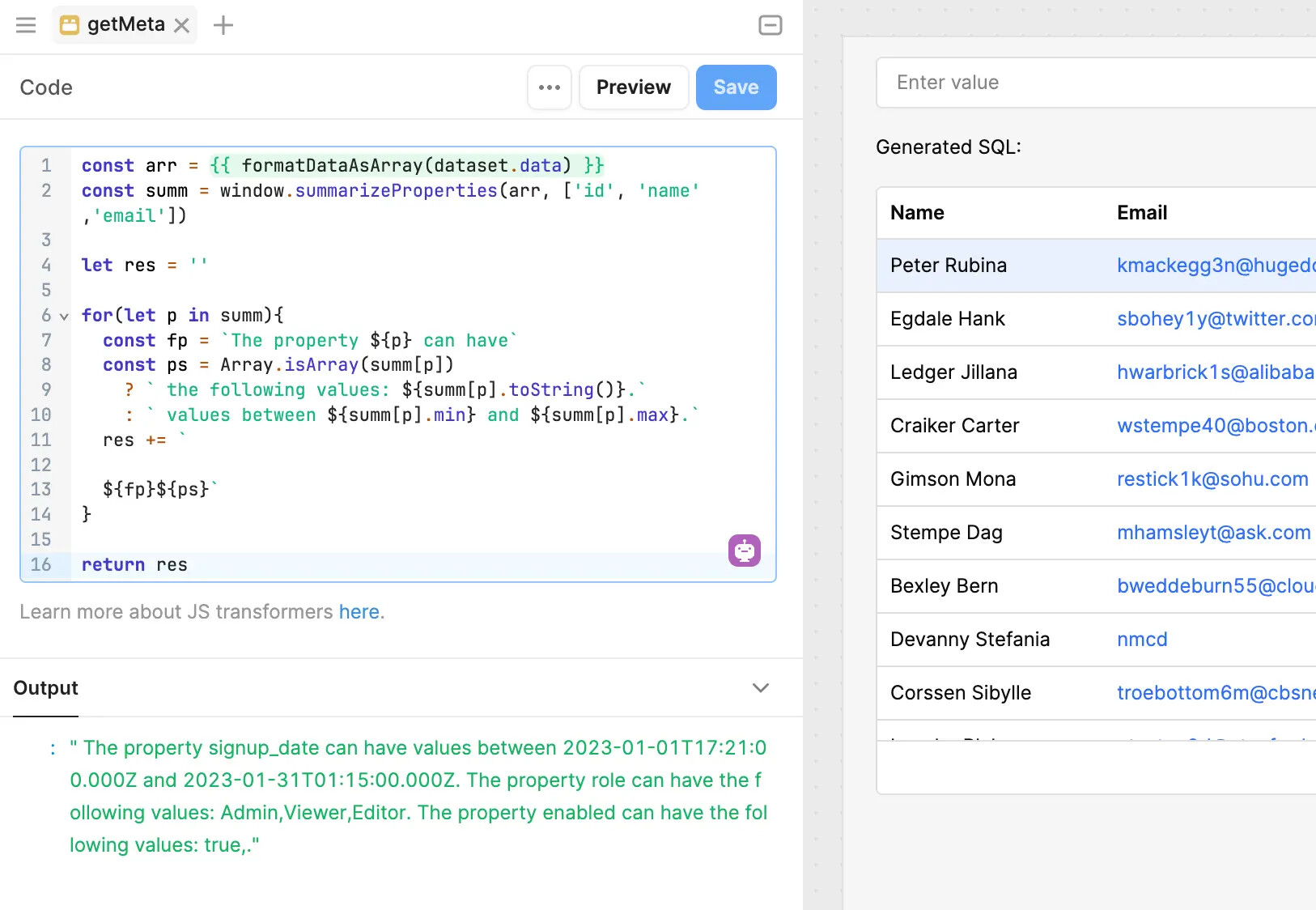

It’s time for the glue. We have all the pieces, but they don't talk to each other yet. Let’s create a Retool Transformer by adding the following code:

Doing this should be pretty self-explanatory—we basically take the original dataset and filter it using AlaSQL, which is already included in Retool.

Now we can change the table source from the dataset to filtered and we’re almost done!

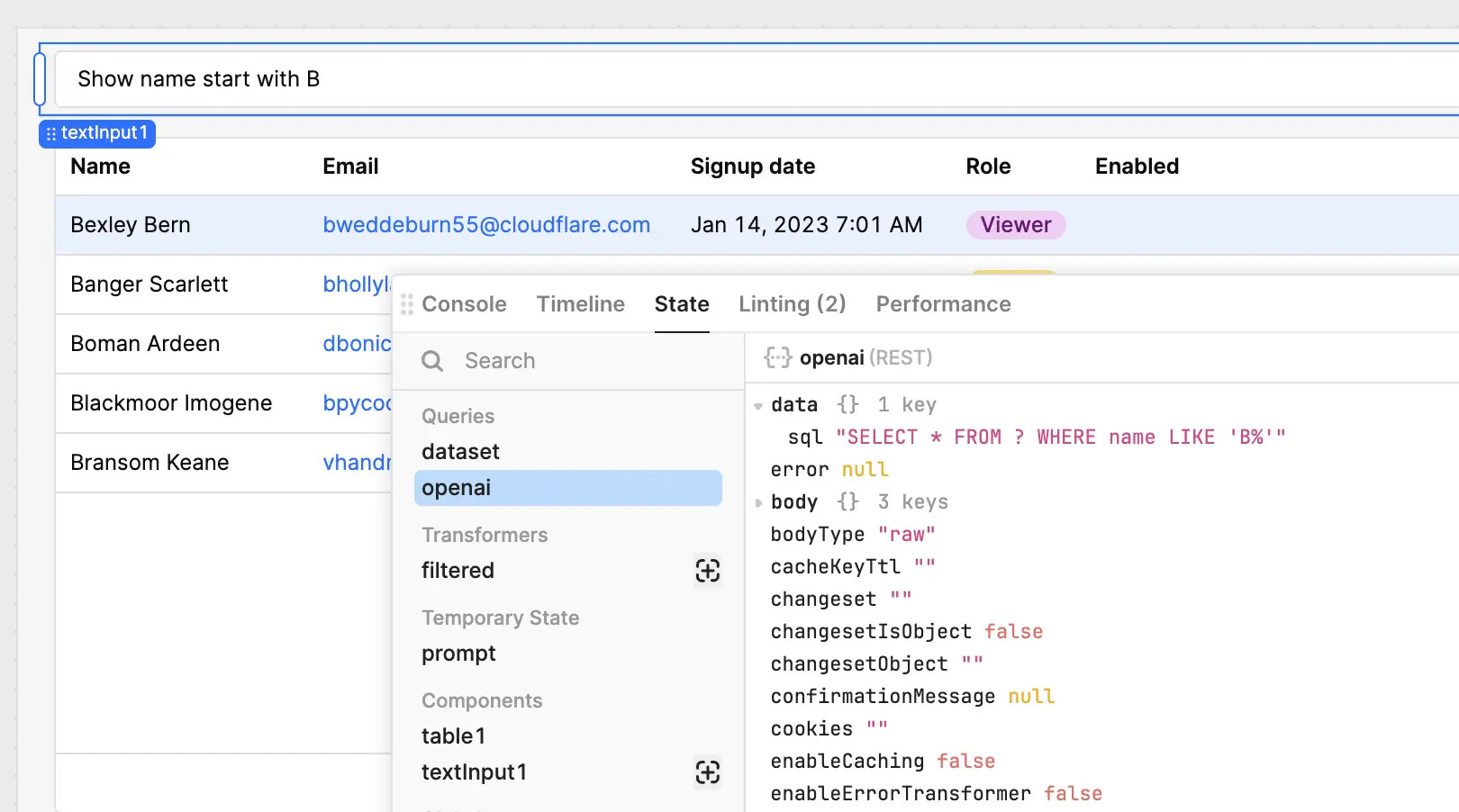

Just add a TextInput and trigger the OpenAI query on submit action, and you should see the magic happen:

The user question is converted into SQL that’s used with AlaSQL to filter the original dataset.

Now, in order to make the prompt smarter, we need to add a bit of context to it. Consider that the more well-structured context GPT-4 has, the better the answers are likely to be.

We already gave it a sample data point that’s useful for understanding the data properties and data types.

But we can give it more. Here’s an updated version of the prompt, adding an additional variable, getMeta:

getMeta is a Retool Transformer that returns information about the dataset. In our sample dataset, this information can include:

You can see there’s a function used there, summarizeProperties, that’s in the Preload JS section of the app.

It analyzes the dataset and returns a summarization according to the value type. Be sure to specify properties you don’t want to summarize— for instance, for things that wouldn’t make sense, such as “name” or “email”—so it doesn't pollute the prompt. (And, of course, consider your dataset and privacy and compliance requirements before handing everything over to OpenAI or any model provider.)

With such additional context, GPT-4 is often better able to answer questions even when the user isn’t perfectly precise in what they’re asking.

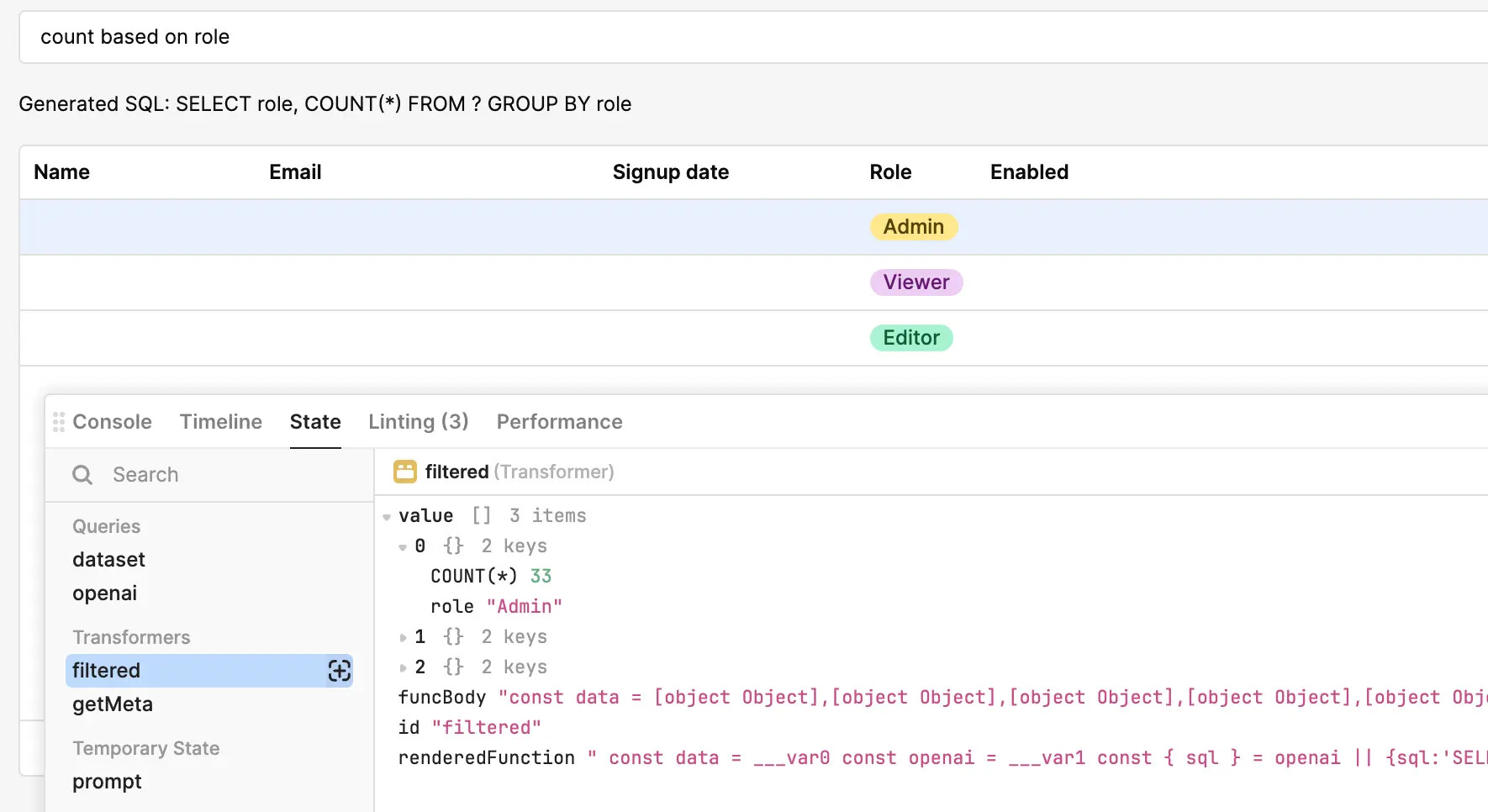

Outside of simple SELECT queries, you can do something even more interesting with this set up.

You can ask for aggregations and calculations too.

AlaSQL is able to “GROUP BY”, “COUNT”, “SUM”, “AVG”, etc. This means you can get not only a filtered version of the dataset, but also a restructured version of it.

This opens up tons of possibilities. At the same time, it brings more complexity in the Retool context, because now our table is not able to display our new dataset properly:

The whole technique revolves around AlaSQL, which runs locally, filtering an already fetched data resource.

For this reason, there are no security issues because the database is not involved at all. Trying to do prompt injection, even instructing AlaSQL to alter the dataset, won’t create any real issues. The worst that can happen is the table won’t render properly.

At the same time, this might also be a limitation. You cannot really “ask” data from your database. For big tables, where you need to paginate or do partial fetches, this technique is not going to work as expected. The bottom line is: it’s just a filter on the already pulled data.

We believe that we're just beginning to explore the potential of AI-native user interfaces. There’s a huge opportunity to rethink many of the current paradigms to make them much more efficient, friendly, and fun.